A first part of the work was devoted to integrating depth into the computational model built around conspicuity and saliency maps. This required a specific study of various features of the depth component. Three components were chosen and analyzed, their respective usefulness was compared, and one component was selected for further practical work. The analysis nevertheless reveals a deep lack of fundamental knowledge in this field.

Finally, the whole was assembled into a multimodal visual attention model that considers intensity, color and depth. To our knowledge, it is the first real attention model that also considers depth.
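As an illustration of how per-mode conspicuity maps might be combined into a single saliency map, here is a minimal sketch. The report does not give the fusion formula, so everything here is an assumption: the function names are hypothetical, and the weighting (squared difference between a normalized map's maximum and its mean, in the spirit of Itti-Koch style models) is one common choice, not necessarily the one used in this project.

```python
def normalize(m):
    """Rescale a 2D map (list of rows) to [0, 1]; a flat map becomes all zeros."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    if hi == lo:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def competition_weight(m):
    """Assumed weighting: (max - mean)^2 of a normalized map.

    A map with one isolated peak gets a weight close to 1,
    a map with many comparable peaks gets a small weight.
    """
    flat = [v for row in m for v in row]
    mean = sum(flat) / len(flat)
    return (max(flat) - mean) ** 2


def saliency_map(conspicuity_maps):
    """Weighted sum of the normalized conspicuity maps (all of equal size)."""
    normed = [normalize(m) for m in conspicuity_maps.values()]
    weights = [competition_weight(m) for m in normed]
    rows, cols = len(normed[0]), len(normed[0][0])
    return [
        [sum(w * m[r][c] for w, m in zip(weights, normed)) for c in range(cols)]
        for r in range(rows)
    ]


def most_salient(saliency):
    """Return (row, col) of the highest saliency value."""
    cells = ((r, c) for r in range(len(saliency)) for c in range(len(saliency[0])))
    return max(cells, key=lambda rc: saliency[rc[0]][rc[1]])


# Toy 3x3 maps: intensity has many peaks, color is flat, depth has one peak.
maps = {
    "intensity": [[0.1, 0.9, 0.1], [0.9, 0.1, 0.9], [0.1, 0.9, 0.1]],
    "color": [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]],
    "depth": [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 5.0]],
}
spot = most_salient(saliency_map(maps))
```

In this toy input the single depth peak outweighs the many equally strong intensity peaks, so the most salient location falls on the depth peak.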

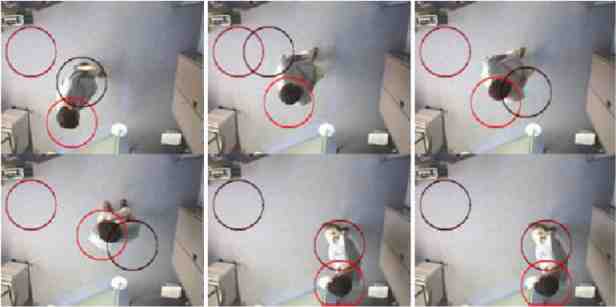

Hall attention task: This task is carried out in a controlled environment that stands for a typical hall scene. The camera is placed on the ceiling with its field of view oriented towards the ground. This specific configuration has the advantage of producing strong signals for people walking by, which makes it a good example of a configuration that can take advantage of depth perception. The experiments concern the detection of objects and people. Again, the results show the good potential of the developed attention process. Specifically, the experiments confirm that the competition principle works satisfactorily. This principle is built into the attention model and acts to balance the contributions of the individual modes.

Figure 1: Object detection by the visual attention simulator that considers both contrast and depth

Exhibition attention task [2]: This task is carried out in the real environment of the exhibition. It consists of finding the most salient locations in the camera's field of view. Specifically, isolated 3D objects like tables give rise to attention spots. Locations or people wearing high-contrast clothes are detected. Other objects, such as colorful flowers, are often the source of the detected attention spots.

Real-time depth-based multimodal attention was demonstrated at the Computer-Expo 2000 exhibition [2]. The presented system consists of a fast PC and a 3D camera. The 3D camera captures the environment, and the resulting color and depth images are sent to the multimodal attention process running on the PC. This process implements the attention model developed during this project. It thus considers the various modes of perception, in this case intensity, color and depth.

This demonstration required considerable software porting and development work, especially to meet the real-time constraints. It relies on a Triclops camera system and a powerful PC.

Multimodal adaptive segmentation and coding

The usefulness of multimodal attention led us to consider and analyze its potential to contribute to other tasks. Two tasks were considered.

Adaptive segmentation task [3]: The idea is to use multimodal attention to improve image segmentation methods based on the seeded region-growing algorithm. In essence, the very specific and conspicuous attention spots delivered by the multimodal attention process are used as seeds for the region growing. The first results obtained indicate good potential for this approach [3].
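The seeding idea can be sketched as follows. This is a simplified seeded region growing on a gray-level image, written for illustration only: the function name, the 4-neighbourhood, and the use of gray values instead of color are assumptions, and the priorities use the region mean at the time a pixel is queued rather than a continuously updated one. The seeds stand in for the attention spots.

```python
import heapq


def seeded_region_growing(image, seeds):
    """Label every pixel with the index of the seed whose region it joins.

    image: 2D list of gray values; seeds: list of (row, col) attention spots.
    Pixels are absorbed in order of their distance to the region mean.
    """
    rows, cols = len(image), len(image[0])
    labels = [[-1] * cols for _ in range(rows)]
    sums = [0.0] * len(seeds)
    counts = [0] * len(seeds)
    heap = []  # entries: (distance to region mean, row, col, label)

    def push_neighbours(r, c, label):
        mean = sums[label] / counts[label]
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] == -1:
                heapq.heappush(heap, (abs(image[nr][nc] - mean), nr, nc, label))

    # Initialise each region with its seed pixel.
    for label, (r, c) in enumerate(seeds):
        labels[r][c] = label
        sums[label] += image[r][c]
        counts[label] += 1
    for label, (r, c) in enumerate(seeds):
        push_neighbours(r, c, label)

    # Grow: always absorb the queued pixel closest to its candidate region.
    while heap:
        _, r, c, label = heapq.heappop(heap)
        if labels[r][c] != -1:
            continue  # already claimed by some region
        labels[r][c] = label
        sums[label] += image[r][c]
        counts[label] += 1
        push_neighbours(r, c, label)
    return labels


# Toy image: dark area on the left, bright area on the right, one seed in each.
image = [
    [10, 10, 10, 200, 200],
    [10, 12, 11, 205, 198],
    [11, 10, 10, 202, 200],
]
labels = seeded_region_growing(image, seeds=[(1, 1), (1, 3)])
```

With one seed in each area, the two regions grow until they meet at the dark/bright boundary.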

[Three image panels: first three spots of attention; original compression at first spot; modified compression at first spot]

Figure 2: The saliency map modulates the compression factor

Adaptive coding task [4]: The idea is to use multimodal attention to improve the subjective quality of coded images. In essence, the saliency map, i.e. the map of multimodal attention, is used as a value that locally modulates the compression factor, improving quality at locations with higher attention at the cost of some degradation at locations with lesser attention. Encouraging results have been found so far [4].
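A minimal sketch of such a modulation is given below. It assumes a block-based coder in which each block has its own quantizer scale; the function name, the block size, the linear mapping and the `fine`/`coarse` limits are all hypothetical choices for illustration, not the scheme described in [4].

```python
def block_quantizer_scales(saliency, block=8, fine=0.5, coarse=2.0):
    """Return one quantizer scale per image block.

    saliency: 2D list with values in [0, 1].
    A block with mean saliency 1 gets the `fine` scale (less compression),
    a block with mean saliency 0 gets the `coarse` scale (more compression);
    values in between are interpolated linearly.
    """
    rows, cols = len(saliency), len(saliency[0])
    scales = []
    for r0 in range(0, rows, block):
        row_scales = []
        for c0 in range(0, cols, block):
            vals = [
                saliency[r][c]
                for r in range(r0, min(r0 + block, rows))
                for c in range(c0, min(c0 + block, cols))
            ]
            mean_saliency = sum(vals) / len(vals)
            row_scales.append(coarse - mean_saliency * (coarse - fine))
        scales.append(row_scales)
    return scales


# Toy 4x4 saliency map with one fully salient 2x2 block in the top-left corner.
toy_saliency = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
scales = block_quantizer_scales(toy_saliency, block=2)
```

Here the salient block receives the fine quantizer scale and all other blocks receive the coarse one, which is the trade-off described above.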

[1] N. Ouerhani & H. Hügli, "Computing visual attention from scene depth", Proc. 15th Int. Conf. on Pattern Recognition, ICPR 2000, Barcelona 3-7 Sept. 2000, IEEE Computer Society Press, pp. 375-378

[2] Computer-Expo 2000, Palais de Beaulieu, April 2000

[3] N. Ouerhani, N. Archip, H. Hügli & P.-J. Erard, "Visual Attention Guided Seed Selection for Color Image Segmentation", Conf. Computer Analysis of Images and Patterns, CAIP'2001

[4] N. Ouerhani, J. Bracamonte, H. Hügli, M. Ansorge & F. Pellandini, "Adaptive Color Image Compression Based on Visual Attention", Conf. ICIAP 2001, Palermo
