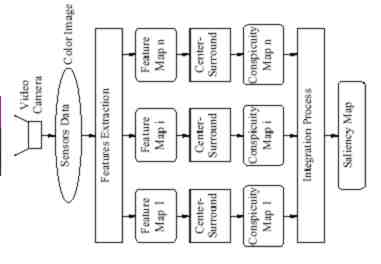

Cue level. The purpose of the cue level is to provide the cue signals. In practice, we plan to work with the same cues as those already selected by CSEM for its architecture, i.e. gradient magnitude and direction, as well as their time derivatives. Activities at this level are less fundamental and mainly concern the development of experimental tools.
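To illustrate these cues, the sketch below computes gradient magnitude and direction and approximates their time derivatives by differencing two consecutive frames. It is a minimal pure-Python sketch under our own assumptions. The function names, the central-difference operator and the frame-difference approximation are illustrative choices, not the operators used in the CSEM architecture.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # row-major grey-level image


def gradient(img: Image) -> Tuple[Image, Image]:
    """Return (magnitude, direction in radians) of the spatial gradient.

    Central differences inside the image, one-sided differences at the border.
    Hypothetical helper: the operator is an illustrative choice, not CSEM's.
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            gx = (img[y][x1] - img[y][x0]) / max(x1 - x0, 1)
            gy = (img[y1][x] - img[y0][x]) / max(y1 - y0, 1)
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.atan2(gy, gx)
    return mag, ang


def time_derivative(prev: Image, curr: Image) -> Image:
    """Approximate d/dt of a cue map by the difference of consecutive frames."""
    return [[c - p for p, c in zip(rp, rc)] for rp, rc in zip(prev, curr)]


def angle_time_derivative(prev: Image, curr: Image) -> Image:
    """Frame difference of directions, wrapped to [-pi, pi] to avoid 2*pi jumps."""
    return [[math.atan2(math.sin(c - p), math.cos(c - p)) for p, c in zip(rp, rc)]
            for rp, rc in zip(prev, curr)]
```

Direction is an angle, so its frame difference is wrapped into the range from -π to π. Otherwise a gradient rotating past the ±π boundary would produce a spurious jump of 2π.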

Focus of attention level. The purpose of this level is to combine the different cues in order to extract the most conspicuous information. Relevant cues and significant locations are made available in temporal order, according to their importance. Significant developments are planned at this level to port the known methods, which rely on relatively heavy algorithms, to hardware with limited computational power.
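Saliency-map models offer one common way to realize such a level. Each cue map is normalized, the maps are summed into a single saliency map, and locations are then picked one after another by a winner-take-all step followed by inhibition of return. The sketch below assumes exactly that. The min-max normalization, the linear weighting, the square suppression radius and all names are hypothetical choices, not the method this project commits to.

```python
from typing import List, Sequence, Tuple

Map = List[List[float]]  # one cue map, row-major


def normalize(m: Map) -> Map:
    """Min-max rescale a cue map to [0, 1]; a constant map becomes all zeros."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    if hi == lo:
        return [[0.0] * len(row) for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def saliency(cues: Sequence[Map], weights: Sequence[float]) -> Map:
    """Weighted sum of normalized cue maps (assumed linear combination)."""
    norm = [normalize(c) for c in cues]
    h, w = len(cues[0]), len(cues[0][0])
    return [[sum(wt * n[y][x] for wt, n in zip(weights, norm)) for x in range(w)]
            for y in range(h)]


def attention_spots(sal: Map, n_spots: int, radius: int) -> List[Tuple[int, int, float]]:
    """Return up to n_spots (row, col, value) triples in non-increasing saliency.

    Winner-take-all picks the current maximum; inhibition of return then zeroes
    a (2*radius+1)^2 square around it so the next pick lands elsewhere.
    """
    s = [row[:] for row in sal]
    h, w = len(s), len(s[0])
    spots: List[Tuple[int, int, float]] = []
    for _ in range(n_spots):
        v, y, x = max((s[r][c], r, c) for r in range(h) for c in range(w))
        if v <= 0.0:
            break  # nothing salient left
        spots.append((y, x, v))
        for r in range(max(0, y - radius), min(h, y + radius + 1)):
            for c in range(max(0, x - radius), min(w, x + radius + 1)):
                s[r][c] = 0.0
    return spots
```

Calling `attention_spots(saliency(cues, weights), 3, radius)` would return up to three locations ordered by importance, comparable to the three main spots of attention shown in Figure 2.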

Figure 1. Multicue visual attention model

Figure 2. Three main spots of attention of a dynamic real scene

Another major concern relates to the natural capacity of the human visual system to process visual information in channels with different spatial sensitivities. This calls for extending the visual attention model to multiple spatial scales. In practice, each visual feature is represented by a vector in which each component represents the feature at a given scale.
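Here is a minimal sketch of this vector representation. For illustration only, it assumes that the scales are obtained by box filtering with increasing radius and that the feature is the local mean intensity. In practice a cue such as gradient magnitude would be computed at each scale instead. The names `box_blur` and `multiscale_feature` are hypothetical.

```python
from typing import List, Sequence

Image = List[List[float]]


def box_blur(img: Image, r: int) -> Image:
    """Mean over a (2r+1)x(2r+1) window, clipped at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            vals = [img[yy][xx] for yy in ys for xx in xs]
            out[y][x] = sum(vals) / len(vals)
    return out


def multiscale_feature(img: Image, radii: Sequence[int]) -> List[List[List[float]]]:
    """Return a per-pixel vector; component k is the feature at scale radii[k].

    Assumption: scale k is modelled by a box filter of radius radii[k].
    """
    maps = [box_blur(img, r) for r in radii]
    h, w = len(img), len(img[0])
    return [[[m[y][x] for m in maps] for x in range(w)] for y in range(h)]
```

With `radii = [0, 1, 2]`, every pixel carries a three-component vector running from fine to coarse. A bright dot therefore responds strongly at the finest scale and weakly at coarser ones.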

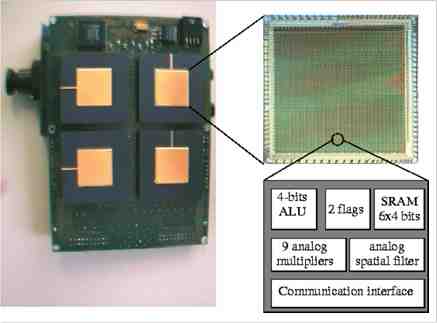

Figure 3. Real-time visual attention implemented on a prototype SIMD architecture

ProtoEye is a CSEM image processing ASIC based on the SIMD (single instruction, multiple data) principle. It consists of a 35 x 35 array of mixed analog-digital cells, so that one processor is assigned to each pixel of the image. The digital part of each cell, working on 4-bit words, performs all operations needed to transform single images and to combine pairs of images. The analog part consists essentially of a diffusion network, which efficiently performs the time-consuming task of low-pass and high-pass spatial filtering. Four ProtoEye chips are connected together to process 64 x 64 gray-level images provided by a CMOS camera. The whole architecture is controlled by a general-purpose microcontroller (sequencer) running at 4 MHz, yielding an effective performance of over 8 giga-operations per second. In addition to its high performance, the image processing platform is fully programmable.
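A digital emulation of the diffusion network shows why it yields both filters. Repeated diffusion steps smooth the image (low-pass), and subtracting the smoothed image from the original leaves the fine detail (high-pass). The sketch below rests on our own assumptions: explicit 4-neighbour diffusion, zero-flux borders and a hypothetical rate `lam`. It does not model the ProtoEye analog circuit, its 4-bit arithmetic or its actual parameters.

```python
from typing import List

Image = List[List[float]]


def diffuse(img: Image, steps: int, lam: float = 0.2) -> Image:
    """Explicit diffusion on a 4-connected grid with zero-flux borders.

    Each step moves every pixel towards its neighbours: u += lam * sum(n - u).
    lam <= 0.25 keeps this explicit scheme stable. (Illustrative emulation only.)
    """
    u = [row[:] for row in img]
    h, w = len(u), len(u[0])
    for _ in range(steps):
        nxt = [row[:] for row in u]
        for y in range(h):
            for x in range(w):
                flux = 0.0
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:  # missing neighbours add no flux
                        flux += u[yy][xx] - u[y][x]
                nxt[y][x] = u[y][x] + lam * flux
        u = nxt
    return u


def high_pass(img: Image, steps: int, lam: float = 0.2) -> Image:
    """High-pass = original minus its diffused (low-pass) version."""
    low = diffuse(img, steps, lam)
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(img, low)]
```

The number of steps sets the spatial extent of the smoothing. More steps spread each pixel further and lower the cut-off frequency of the low-pass output.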

